In brief

- The growing accessibility of computer resources has led to the flourishing of AI solutions to automate mechanical and repetitive actions. This helps drive competitive advantage for businesses by reducing their dependency on expensive human capital

- Conversational AI is a set of tools and models that use AI/ML to try and mimic human communication

- We have been researching, experimenting, and working with those tools to automate tasks for our clients. This article describes our experience with Conversational AI embedded in chatbot applications based on services offered by the main providers in today’s market

Automating mechanical and repetitive actions within an organization is a key factor in providing competitive advantages to businesses. This is because many processes demand significant amounts of human capital, which is both expensive and could be better utilized in more fulfilling and productive activities. The growing accessibility of computer resources has led to the flourishing of AI solutions, and our Data Science department at Zoreza Global boasts multiple teams dedicated to AI/ML technology.

One application leveraging AI/ML is conversational AI, a set of tools and models which attempt to mimic human communication. Conversational AI can be placed in any situation where two entities communicate: the client entity is a single person or a group of people, and the server entity is a computer application powered by Artificial Intelligence. This branch of AI is also sought after for building brand authority: given its growing popularity among AI trends, it showcases a business's ability to incorporate cutting-edge digital innovation.

We have been researching, experimenting, and working with those tools to automate tasks both for our internal needs and those of our clients. What we will be showing is our experience with conversational AI embedded in chatbot applications, leveraging services offered by the main providers in today’s market.

Cloud-based conversational AI

Despite the emergence of language models like ChatGPT, Conversational AI platforms such as Dialogflow and Lex continue to hold relevance in the field. These platforms offer a range of specialized functionalities that cater specifically to the creation of chatbot applications. With pre-built components, intent recognition, entity extraction, and context management, they provide developers with the tools needed to build robust conversational interfaces efficiently.

The chatbot-building tools we will show come from the leading cloud platforms: GCP, Azure and AWS.

First, the offerings from these providers will be described. In the second part, the relevant functionalities of chatbots for each cloud platform will be shown through examples. Finally, a summary and some considerations will follow.

GCP Dialogflow

Google Cloud Platform offers the Dialogflow service with two editions, Essentials and CX.

Figure 1. The Dialogflow Essentials web interface for developing chatbots

Dialogflow Essentials (ES) is the market reference for building chatbots, as it is the most widely used. It was known as API.AI in 2016, the year Google bought it.

Chatbot applications can differ in shape as well as complexity. The capabilities of each tool are rated using the following “Chatbot size” metrics:

Figure 2. Chatbot size and complexity metrics

Dialogflow Essentials accommodates small to medium chatbot sizes.

The second edition, CX, is the newest Google service and offers the most advanced features on the market. It is useful for medium to big chatbot applications. It is of course possible to build a small project with it, but Google does not intend to deprecate ES, which remains preferable for such simple tasks thanks to its ease of development and pricing.

Figure 3. Dialogflow CX web interface for developing chatbots

Regardless of the edition, they use state-of-the-art ML models like BERT and are backed by the GCP ecosystem.

Dialogflow ES has plenty of built-in integrations and, being ubiquitous, comes with a lot of documentation and use cases from the development community. At Zoreza Global, the best fit between Essentials and CX is chosen depending on client requirements. Our experience leans towards developing with ES, mainly due to the many built-in integrations available.

AWS

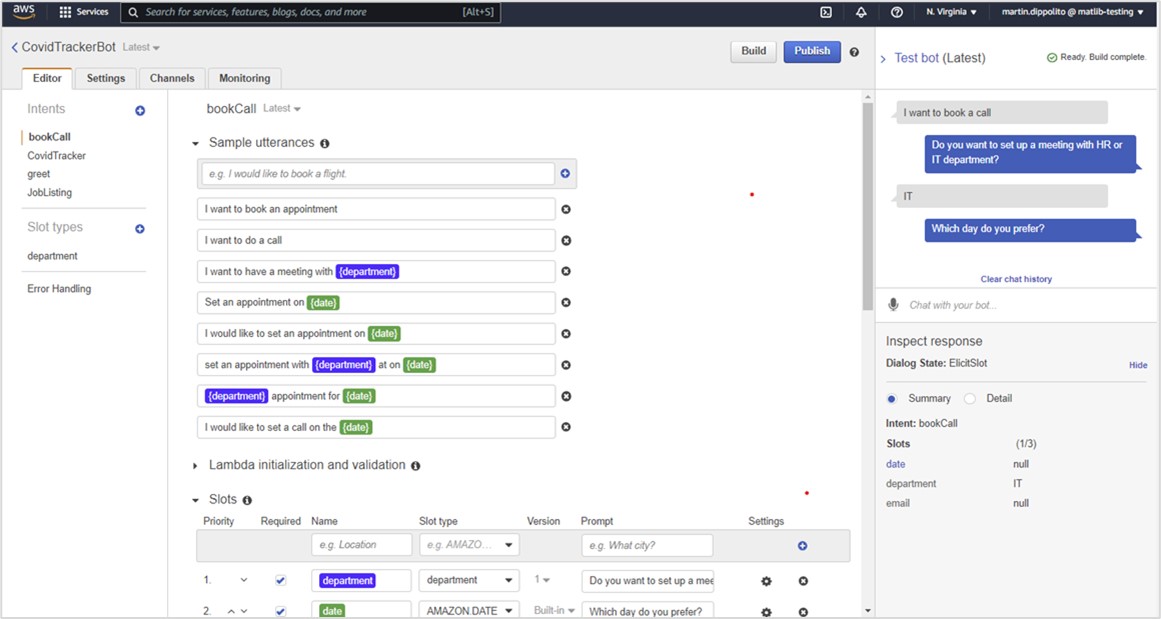

Lex is the Conversational AI service delivered by AWS. As of now, two versions are available, V1 and V2.

The first edition (V1) is a good choice for small to medium chatbot applications. It must be noted that it will eventually migrate to Lex V2 and the AWS team is working towards allowing an easy migration from the older service to the newer.

Figure 4. AWS Lex V1 web browser interface

The V2 version is a refactoring effort by AWS to enable better workflows and advanced features for developers. Those familiar with AWS will find that the web interface adheres to the latest UI style chosen by AWS. Unlike Dialogflow, where both editions coexist, AWS intends to replace V1 with V2; hence V2 is likewise intended for small to medium chatbots.

Figure 5. AWS Lex V2 web browser interface

Development in V2 is easier and more intuitive than in V1, which will eventually be phased out. However, as with Google Dialogflow ES, the maturity of V1 and the knowledge shared by its community make it easy to integrate with external services and extend bot functionality, at least at the time of writing.

Microsoft

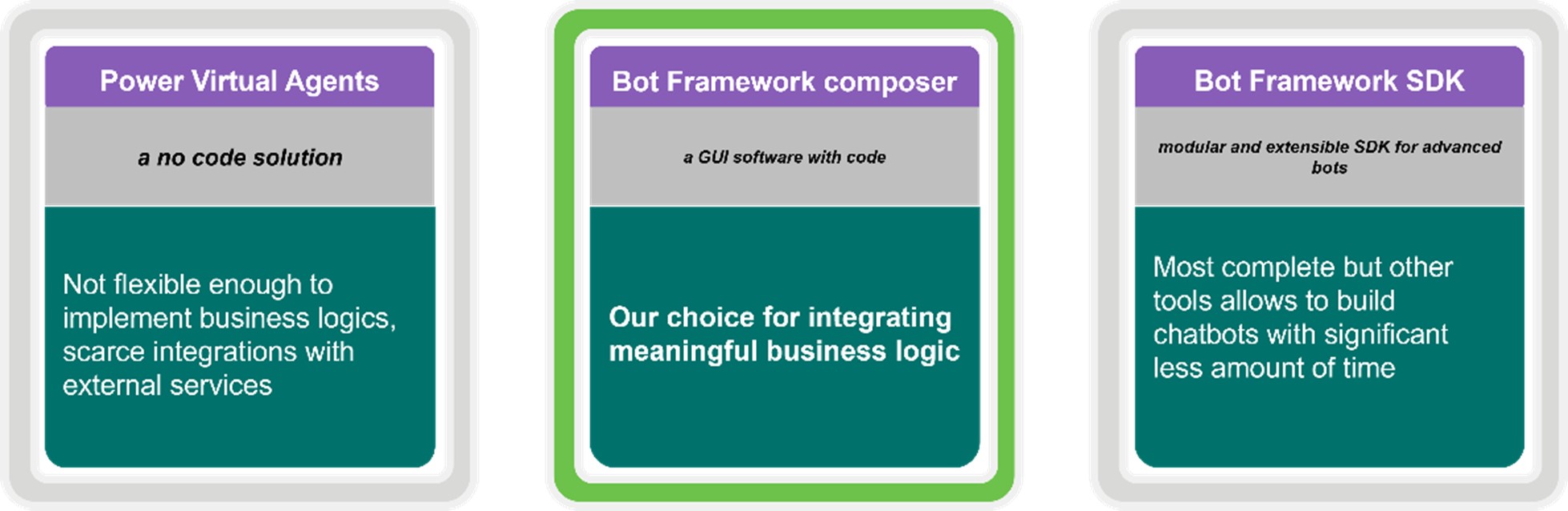

Microsoft has three ways of building chatbots:

- Power Virtual Agents, a no code solution powered by the Power ecosystem

- Microsoft Bot composer, a GUI application

- Microsoft bot framework API, a modular and extensible SDK for developing chatbots in Python or C#

Figure 6. Microsoft chatbot services, Bot Framework composer was selected as our choice

The first solution is too limited to implement complex business logic, while the last is too advanced and requires too much development for a regular project. Bot composer is therefore a good compromise: it offers a GUI with assisted no-code development that can be modified and extended with JavaScript or C#.

Figure 7. Microsoft Bot Composer as a desktop application in Windows 10

As the interface shows, the software must be installed locally, since no hosted cloud version is available. The application runs on the main operating systems, i.e., Windows, macOS and Linux.

Although it can be used to build chatbots of any complexity, in our experience it is best suited to medium projects. For small ones it requires too much effort, and Power Virtual Agents is probably more suitable. For big projects, it lacks easy integration with external services such as databases.

Demo

Here are some examples, with code, built with Dialogflow Essentials, Lex V1 and Bot composer.

The main demo features shown are:

- Automation of processes

- Integration with cloud services and external sources

- Preset Rich responses

- Implementation of automated FAQ system

- Deployment modalities

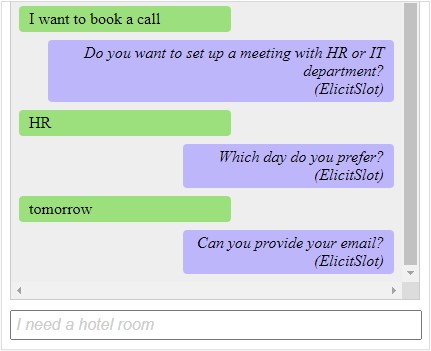

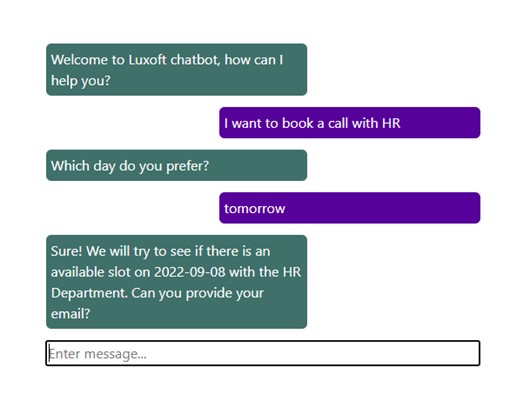

Automation

For example, let’s design a dialog whose purpose is to set up a call. To fulfill this intent, the system must collect the user’s email, a date and a department (either HR or IT). A chatbot can collect all this information in a single conversation and can scale to many more clients than a limited group of people can handle.

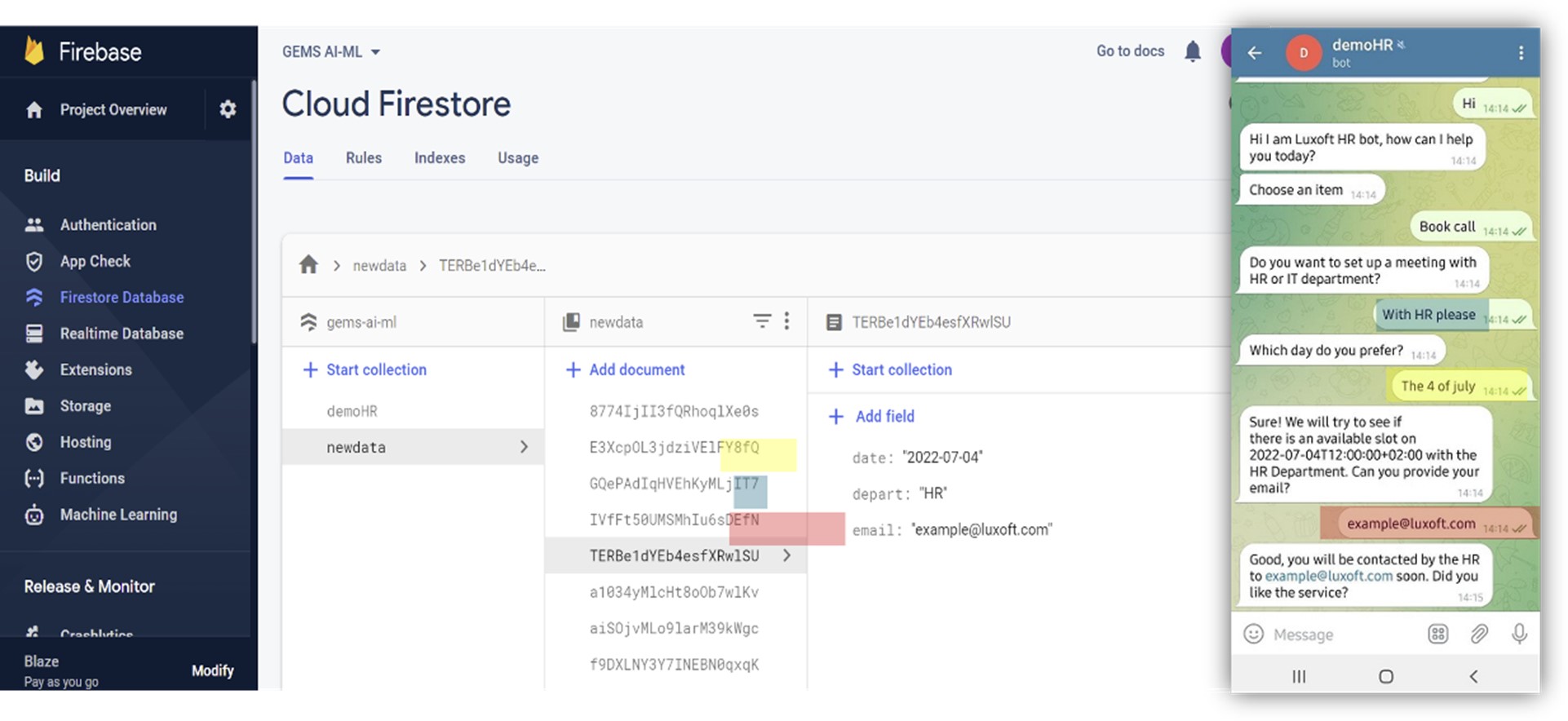

Figure 8. From left to right: Google Dialogflow Essentials integrated on Telegram, AWS Lex V1 embedded test tool, Microsoft Bot Framework Composer embedded test tool

Dialogflow is presented through a Telegram integration, and the screenshot shows the Android application. For Lex and Bot composer, the built-in test tool of each service is displayed.

All three platforms make it easy to build this kind of conversation with a no-code process.
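Conceptually, the dialog described in this section is a slot-filling loop: the bot keeps asking until every required parameter is set. The following is a minimal sketch of that idea in plain JavaScript. It does not use any platform API, and the names `REQUIRED_SLOTS` and `nextPrompt`, as well as the prompt texts, are hypothetical.

```javascript
// Hypothetical slot definitions for the "set up a call" dialog.
const REQUIRED_SLOTS = [
  { name: 'email', prompt: 'What is your email address?' },
  { name: 'date', prompt: 'On which date should we call you?' },
  { name: 'department', prompt: 'Which department do you need, HR or IT?', allowed: ['HR', 'IT'] },
];

// Returns the prompt for the first slot that is missing or invalid,
// or null when every slot is filled and the intent can be fulfilled.
function nextPrompt(collected) {
  for (const slot of REQUIRED_SLOTS) {
    const value = collected[slot.name];
    const missing = value === undefined || value === null || value === '';
    const invalid = !missing && Boolean(slot.allowed) && !slot.allowed.includes(value);
    if (missing || invalid) {
      return slot.prompt;
    }
  }
  return null;
}
```

Each platform implements this loop for you; the sketch only makes explicit what the no-code editors configure.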

External integration — Cloud functions

With Cloud functions, it is possible to extend the functionality of chatbots by connecting them to external services. On GCP, Dialogflow is backed by Cloud Functions and provides, by default, a single JavaScript script into which external services can be integrated. AWS has Lambda functions, and Lex can attach a function to each intent. Azure has the Azure Functions service, but within Bot composer only HTTP requests can be implemented, which complicates communication with external services.
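On the AWS side, a fulfillment Lambda receives the matched intent with its slots and returns a `dialogAction`. A minimal sketch for Lex V1 follows. The function names and the slot names (`email`, `date`, `Department`) are assumptions for illustration, not the names used in our bot.

```javascript
// Build a Lex V1 "Close" response that ends the conversation with a message.
function close(content) {
  return {
    dialogAction: {
      type: 'Close',
      fulfillmentState: 'Fulfilled',
      message: { contentType: 'PlainText', content },
    },
  };
}

// Fulfillment logic for a hypothetical "set up a call" intent.
// Lex V1 passes the collected slots in event.currentIntent.slots.
function fulfillSetCall(event) {
  const slots = event.currentIntent.slots;
  return close(
    `Call with ${slots.Department} booked for ${slots.date}. A confirmation goes to ${slots.email}.`
  );
}

// Lambda entry point; an async handler returns the response object directly.
const handler = async (event) => fulfillSetCall(event);
// In the deployed file this would be exported: exports.handler = handler;
```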

A very interesting functionality consists in extending the scope of the bot by collecting the data the user types.

Google embedded system

Figure 9. Google Firebase web interface with the Firestore service collecting data from Google Dialogflow

Here we can see the task of collecting data from the chatbot to a storage service. Dialogflow can send and receive data from the Firestore Database thanks to a GCP Cloud function. Here, the parameters {email, Department, date} are stored on the Database whenever the user types them during the automation task of booking a call.

To implement this feature, the developer opens Fulfillment in the left menu and edits the code in the inline editor.

Figure 10. Google Dialogflow inline cloud function written in JS

The code is written in Node.js and can also be edited on the GCP Console. The following code connects the chatbot to Firebase.

    'use strict';

    const functions = require('firebase-functions');
    const {WebhookClient} = require('dialogflow-fulfillment');
    const admin = require('firebase-admin');

    // Initialize the Firebase Admin SDK and get a Firestore handle.
    admin.initializeApp();
    const db = admin.firestore();

    exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
      const agent = new WebhookClient({ request, response });
      const last_body = request.body.queryResult;

      // Store the booking parameters collected during the conversation.
      function send_book_data_firebase(agent) {
        const email = agent.parameters.email;
        const depart = last_body.outputContexts[0].parameters.Department;
        const date_sel = last_body.outputContexts[0].parameters.date;
        // Return the promise so the write completes before the function ends.
        return db.collection('dialogflow_firestore').add({
          email: email,
          depart: depart,
          date: date_sel
        });
      }

      // Map the intent name to its handler.
      const intentMap = new Map();
      intentMap.set('set-call-email', send_book_data_firebase);
      agent.handleRequest(intentMap);
    });

Below, we will explain each block of code.

    'use strict';

    const functions = require('firebase-functions');
    const {WebhookClient} = require('dialogflow-fulfillment');

‘use strict’ indicates that the code should be executed in ‘strict mode’. In strict mode you cannot, for example, use undeclared variables. The two imports provide the Cloud Functions entry point and the client that handles Dialogflow fulfillment requests.

…

    const admin = require('firebase-admin');
    admin.initializeApp();
    const db = admin.firestore();

    exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
      const agent = new WebhookClient({ request, response });
      const last_body = request.body.queryResult;

…

The first three lines initialize the connection with Firestore. The remaining lines handle the message that Dialogflow sends when the user types text, along with several other pieces of information (such as the parameters collected so far).

…

      function send_book_data_firebase(agent) {
        const email = agent.parameters.email;
        const depart = last_body.outputContexts[0].parameters.Department;
        const date_sel = last_body.outputContexts[0].parameters.date;
        // Return the promise so the write completes before the function ends.
        return db.collection('dialogflow_firestore').add({
          email: email,
          depart: depart,
          date: date_sel
        });
      }

      const intentMap = new Map();
      intentMap.set('set-call-email', send_book_data_firebase);
      agent.handleRequest(intentMap);
    });

The send_book_data_firebase function collects the attributes for email, department and date and sends them to the Firestore collection called ‘dialogflow_firestore’.

The last three statements ensure that send_book_data_firebase runs whenever the Dialogflow service detects the ‘set-call-email’ intent.
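The handler reads `outputContexts[0]`, which assumes that the context carrying the parameters is listed first. A more defensive sketch scans every output context of the webhook request for the parameter. The helper name `findContextParam` is hypothetical.

```javascript
// Return the first non-empty value of `key` found in any output context
// of a Dialogflow ES webhook request, or undefined if no context has it.
function findContextParam(queryResult, key) {
  const contexts = queryResult.outputContexts || [];
  for (const context of contexts) {
    const params = context.parameters || {};
    if (params[key] !== undefined && params[key] !== '') {
      return params[key];
    }
  }
  return undefined;
}
```

Inside `send_book_data_firebase`, a call such as `findContextParam(last_body, 'Department')` could then replace the indexed access.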

Cross-platform integration

Thanks to serverless microservices, it is possible to integrate cloud services across platforms.

One of our experiments uses the AWS Lambda function behind Lex to store data in Firestore by installing the Firebase library in the Node.js runtime. The user types into an AWS Lex bot, and the data ends up in the Google Firestore database service.

Figure 11. Data stored through integration between AWS Lex V1 with the AWS Lambda function and Firestore database

This means we are not bound to the cloud platform hosting the chatbot service; the bot can reach virtually any other service. To achieve this, the AWS Lambda function uses the Node package ‘firebase-admin’.
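A minimal sketch of such a Lambda function is shown below. The Firestore handle is passed in so that the logic can be exercised without credentials. The slot names, the reuse of the ‘dialogflow_firestore’ collection, and the way the service-account key is loaded are assumptions.

```javascript
// Map Lex V1 slots to the document stored in Firestore (slot names are assumed).
function toBookingRecord(slots) {
  return { email: slots.email, depart: slots.Department, date: slots.date };
}

// Build a Lambda handler around any object exposing Firestore's
// collection(name).add(doc) interface.
function makeHandler(db) {
  return async (event) => {
    await db.collection('dialogflow_firestore').add(toBookingRecord(event.currentIntent.slots));
    return {
      dialogAction: {
        type: 'Close',
        fulfillmentState: 'Fulfilled',
        message: { contentType: 'PlainText', content: 'Your call has been booked.' },
      },
    };
  };
}

// In the deployed Lambda (assumes firebase-admin is bundled with the function
// and a service-account key object is available as `serviceAccount`):
//   const admin = require('firebase-admin');
//   admin.initializeApp({ credential: admin.credential.cert(serviceAccount) });
//   exports.handler = makeHandler(admin.firestore());
```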

Rich responses

All services have prebuilt templates allowing users to provide rich responses.

Figure 12. Card rich responses for Dialogflow, Lex and Bot composer, respectively

As an example for each service, consider a response that tells the user where to find jobs on our career portal.

The Lex implementation of cards is the easiest: the developer only has to fill in a form, and it comes with an image preview too.

Figure 13. Integrate rich response in AWS Lex V1
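Besides the console form, a Lex V1 card can also be returned from a Lambda function as part of the `dialogAction`. The sketch below reuses the career portal example under that assumption. The helper name `careerCardResponse` is hypothetical.

```javascript
// Build a Lex V1 Lambda response that closes the dialog and attaches a generic card.
function careerCardResponse() {
  return {
    dialogAction: {
      type: 'Close',
      fulfillmentState: 'Fulfilled',
      message: { contentType: 'PlainText', content: 'Nice, here you can find our career portal' },
      responseCard: {
        version: 1,
        contentType: 'application/vnd.amazonaws.card.generic',
        genericAttachments: [
          {
            title: 'Open vacancies',
            subTitle: 'Find Your Opportunity Here',
            // Link opened when the user selects the card.
            attachmentLinkUrl: 'https://career.luxoft.com/',
          },
        ],
      },
    },
  };
}
```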

For Dialogflow, this functionality is made available by JavaScript coding on the inline editor or GCP console on the Cloud functions service.

    const {Card, Suggestion} = require('dialogflow-fulfillment');

    exports.dialogflowFirebaseFulfillment = functions.https.onRequest((request, response) => {
      const agent = new WebhookClient({ request, response });
      …
      // Reply with a short text followed by a card linking to the career portal.
      function luxoft_career(agent) {
        agent.add(`Nice, here you can find our career portal`);
        agent.add(new Card({
          title: `Open vacancies`,
          imageUrl: 'https://career.luxoft.com/',
          text: `Find Your Opportunity Here`,
          buttonText: 'Career website',
          buttonUrl: 'https://career.luxoft.com/'
        }));
      }
      …
      intentMap.set('luxoft-career', luxoft_career);
      …

As with the Firestore database integration, the ‘intentMap.set’ method triggers the ‘luxoft_career’ function when the correct intent is matched. In this case, the rich response is added by instantiating a Card object and filling in its options object with the required information.

Bot composer uses the Hero card response. Using Bot composer’s template syntax, the response is declared as ‘HeroCard’, and each line holds one property and its value.

Figure 14. Steps to integrate a rich response in Bot Composer
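For reference, a Hero card corresponds to a Bot Framework attachment with the content type `application/vnd.microsoft.card.hero`. The sketch below builds that payload by hand for the same career portal example. The helper name `heroCardAttachment` is hypothetical, and in Composer itself the card is declared in the response editor rather than in code.

```javascript
// Build a Bot Framework hero card attachment with a single "open URL" button.
function heroCardAttachment({ title, text, buttonTitle, url }) {
  return {
    contentType: 'application/vnd.microsoft.card.hero',
    content: {
      title,
      text,
      buttons: [{ type: 'openUrl', title: buttonTitle, value: url }],
    },
  };
}

const careerCard = heroCardAttachment({
  title: 'Open vacancies',
  text: 'Find Your Opportunity Here',
  buttonTitle: 'Career website',
  url: 'https://career.luxoft.com/',
});
```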

FAQ feature

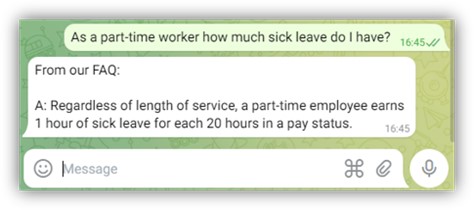

Application products might need to integrate an FAQ functionality to extend chatbot capabilities. In Google Dialogflow and Azure, there are built-in mechanisms for extracting knowledge from a semi-structured FAQ document. Both Dialogflow and Azure offer this service for free up to a limited set of documents. Lex, unfortunately, lacks this feature.

Figure 15. One of the questions in a FAQ document

For example, let’s pretend we have a document with Questions and Answers that was trained for FAQ functionality in both Dialogflow and Bot composer. Here we have selected only one of the questions.

Figure 16. Dialogflow FAQ integrations by means of Knowledge connector

Google Dialogflow Essentials has the Knowledge connector: the developer uploads an FAQ document, and Natural Language Understanding (NLU) machine learning models extract the questions and answers. The answers are always returned as written, whereas for the questions the models learn a generalized representation. Hence, users are not bound to pose questions exactly as they appear in the document.

If we rephrase the question, it is possible to see that the bot service understood the question and responded with the right answer.

Figure 17. Bot composer FAQ integrations by means of Q&A Azure service

Here, in fact, the original question is different from the one we fed into the chatbots.
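To illustrate why a rephrased question can still reach the stored answer, here is a toy matcher based on word overlap (Jaccard similarity). It is a deliberately simple stand-in, not the NLU models used by Dialogflow or Azure, and the FAQ entry and the threshold are made up for the example.

```javascript
// Lowercase, strip punctuation, and split into a set of words.
function tokens(text) {
  return new Set(text.toLowerCase().replace(/[^a-z0-9\s]/g, ' ').split(/\s+/).filter(Boolean));
}

// Jaccard similarity: shared words divided by all distinct words.
function similarity(a, b) {
  const ta = tokens(a);
  const tb = tokens(b);
  const shared = [...ta].filter((word) => tb.has(word)).length;
  const total = new Set([...ta, ...tb]).size;
  return total === 0 ? 0 : shared / total;
}

// Return the answer of the best-matching FAQ entry, or null below the threshold.
function answer(faq, question, threshold = 0.3) {
  let best = null;
  let bestScore = 0;
  for (const entry of faq) {
    const score = similarity(entry.question, question);
    if (score > bestScore) {
      best = entry;
      bestScore = score;
    }
  }
  return best !== null && bestScore >= threshold ? best.answer : null;
}

// Hypothetical FAQ entry, for illustration only.
const faq = [
  { question: 'How can I reset my password?', answer: 'Use the "Forgot password" link on the login page.' },
];
```

With this entry, the rephrased question "How do I reset the password?" shares four of eight distinct words with the stored one and still resolves to the same answer, while an unrelated question returns `null`.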

Deployment

Once the chatbot is completed, it must be made available to users. Google Dialogflow is the only service allowing simple HTML integration by means of an iframe tag.

By simply copying the tag provided on the Integrations page and pasting it into a web page, it is possible to start using the service.

Figure 18. iFrame code provided by Google Dialogflow Essentials for HTML integration

Of course, custom implementation is the best fit for integrating those services as the backend of several applications and platforms. For Bot Composer, the chatbot must be connected to the Azure Bot service whereas for the other two services, custom integration is made easier by an SDK provided by Google and AWS.

For AWS Lex, an example is a JavaScript integration. It can be as simple as loading the SDK library from a source in an index.js script.

Figure 19. Custom implementation with JavaScript and HTML/CSS with Lex as backend
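A sketch of the call behind such a front end is shown below, assuming the AWS SDK for JavaScript v2 and its `LexRuntime.postText` operation. The bot name, alias, region and user id are placeholders, and the client is passed in so that the function does not depend on credentials.

```javascript
// Build the PostText request for one user utterance (bot name and alias are placeholders).
function buildPostTextParams(userId, inputText) {
  return { botName: 'MyBot', botAlias: '$LATEST', userId, inputText };
}

// Send the utterance through a LexRuntime-compatible client and return the bot's reply text.
async function sendToLex(lexRuntime, userId, inputText) {
  const data = await lexRuntime.postText(buildPostTextParams(userId, inputText)).promise();
  return data.message;
}

// With the real SDK (v2), the client would be created along these lines:
//   const AWS = require('aws-sdk');
//   const lexRuntime = new AWS.LexRuntime({ region: 'us-east-1' });
//   sendToLex(lexRuntime, 'user-123', 'I want to set up a call').then(console.log);
```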

For Google Dialogflow, we also built a custom application with Flask, a Python package for building web applications.

Figure 20. Custom implementation with Python Flask package with Google Dialogflow as Backend

Pricing

The pricing with regard to texts varies between services.

Figure 21. Rough cost estimates for text-based communication only

Dialogflow turns out to be the most expensive for 1000 texts. Lex and Bot composer are the cheapest for texts only, although the latter incurs additional costs because Azure services must be provisioned.

Each service also offers a free trial; there, Dialogflow is the cheapest alternative, followed by Bot composer and Lex.

Summary

Dialogflow is the most suitable tool for quick “Proof of Concepts” thanks to several deployment options. It has no infrastructure costs, and it is the richest in features. For high message volumes, its competitors might be more cost-effective.

Lex also has no relevant infrastructure costs and is a good candidate for delivering “proof of concepts” by customizing the front-end interface. For high usage, it can be a cost-efficient tool, and thanks to the one-to-one mapping between intents and Lambda functions, it’s highly customizable.

Bot composer is a good alternative for high-usage applications, but more assumptions must be made during the early design of the final product. Infrastructure costs and deployment can slow down the development process. It also has high potential, but unlike its competitors, its documentation is difficult to understand, which in turn makes it difficult to estimate its real capabilities.

Conclusion

Automating mechanical and repetitive actions within organizations has become essential for gaining competitive advantages, as it optimizes the utilization of human capital and redirects it towards more fulfilling activities.

Despite the emergence of language models like ChatGPT, specialized platforms like Dialogflow and Lex remain relevant for developing robust chatbot applications. As shown in the previous examples, these platforms offer pre-built components and tools to effectively create conversational interfaces.