In brief

- For software-defined vehicles, sensor calibration is crucial for obtaining accurate data, ensuring trustworthy reference systems and enabling advanced features such as autonomous driving

- Proper calibration enables LiDAR ego-motion correction, improves ground truth data generation through sensor fusion, and plays a pivotal role in achieving SAE Level 3+ autonomous driving

- Zoreza Global offers comprehensive calibration services for hassle-free, highly accurate sensor calibration

Imagine your car performs an emergency brake on the highway because a vehicle ahead is incorrectly identified as being in your lane, although it is actually driving in a different lane. Not ideal, right? That's why precise sensor calibration is paramount. In this blog, you'll learn what calibration is, how it is used and how you can get your systems calibrated with minimal effort. We'll also show which services Zoreza Global offers to help you make the most of your car's sensors.

Hit the process, not the obstacle

In modern software-defined vehicles, many intelligent driver-assistance systems and autonomous driving (AD) features rely on accurate sensor data. Cameras, radar and LiDAR sensors are the 'eyes' of a modern car. But even the best sensor is useless if its output is unreliable or degraded during post-processing. Calibration is the key to usable sensor data: only with meticulously calibrated sensors can you build trustworthy reference systems that help you pass validations, fulfil regulations and enable SAE Level 3+ autonomous driving.

Calibration 101 — the basics

First, let’s define the basic ideas and principles.

What is (sensor) calibration?

For those of us dealing with measurement and testing, sensor calibration is the procedure of determining the parameters needed to interpret sensor data correctly.

In the automotive industry in particular, we typically distinguish between two types of calibration: intrinsic and extrinsic calibration.

Intrinsic calibration is the act of finding inherent parameters that are necessary for the interpretation of raw sensor data.

Typical use cases are intrinsic camera calibration for rectifying distorted images and the correction of known distance measurement inaccuracies in LiDAR systems.
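To make "inherent parameters" more concrete for a camera, here is a minimal sketch assuming the widely used pinhole model with two radial distortion coefficients (`k1`, `k2`). The class and function names and all parameter values are hypothetical; a real intrinsic calibration estimates these values from images of a calibration pattern. Because the radial model has no closed-form inverse, undistortion uses a simple fixed-point iteration.

```python
from dataclasses import dataclass


@dataclass
class CameraIntrinsics:
    fx: float  # focal length in pixels (x direction)
    fy: float  # focal length in pixels (y direction)
    cx: float  # principal point x, in pixels
    cy: float  # principal point y, in pixels
    k1: float  # first radial distortion coefficient
    k2: float  # second radial distortion coefficient


def distort_normalized(x, y, k1, k2):
    """Apply radial distortion to normalized (undistorted) image coordinates."""
    r2 = x * x + y * y
    factor = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * factor, y * factor


def undistort_pixel(u, v, cam, iterations=20):
    """Map a distorted pixel (u, v) to its undistorted pixel position.

    Solves x_dist = x_undist * factor(x_undist) by iterating
    x_undist = x_dist / factor(x_undist), starting from x_undist = x_dist.
    """
    # Pixel -> normalized coordinates of the distorted point
    xd = (u - cam.cx) / cam.fx
    yd = (v - cam.cy) / cam.fy
    x, y = xd, yd  # initial guess: no distortion
    for _ in range(iterations):
        r2 = x * x + y * y
        factor = 1.0 + cam.k1 * r2 + cam.k2 * r2 * r2
        x, y = xd / factor, yd / factor
    # Normalized -> pixel coordinates of the undistorted point
    return cam.fx * x + cam.cx, cam.fy * y + cam.cy


if __name__ == "__main__":
    cam = CameraIntrinsics(fx=1000.0, fy=1000.0, cx=960.0, cy=540.0, k1=-0.2, k2=0.05)
    print(undistort_pixel(1250.0, 350.0, cam))
```

For the moderate distortion assumed here, the iteration converges quickly; strongly distorted wide-angle lenses usually need a different model and a more robust solver.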

Extrinsic calibration is the act of finding the translational and rotational offset between a sensor coordinate system and another coordinate system (e.g., another sensor or the vehicle coordinate system).

Typical use cases include sensor fusion, where measurements from different types of sensors are combined to improve accuracy, and sensor validation, where the output of a device under test (DUT) is compared with ground truth (GT) data.
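Extrinsic calibration ultimately yields a rotation and a translation. As a simplified planar sketch, assuming both sensors observe the same calibration targets and the target positions have already been matched, the rotation angle and translation between two sensor frames can be estimated in closed form with a least-squares rigid fit (a 2D Procrustes/Kabsch-style solution). The function name is hypothetical, and real setups solve the full 3D problem with six degrees of freedom.

```python
import math


def estimate_planar_extrinsics(src, dst):
    """Least-squares rigid transform (theta, tx, ty) with dst ~ R(theta) * src + t.

    src, dst: lists of matched (x, y) target positions in the two sensor frames.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need at least two matched point pairs")
    n = len(src)
    # Centroids of both point sets
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    dx = sum(p[0] for p in dst) / n
    dy = sum(p[1] for p in dst) / n
    # Accumulate dot and cross terms of the centered point pairs
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # The angle maximizing sum(b . R a) minimizes the squared residuals
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid
    tx = dx - (c * sx - s * sy)
    ty = dy - (s * sx + c * sy)
    return theta, tx, ty


if __name__ == "__main__":
    lidar_targets = [(0.0, 0.0), (4.0, 1.0), (2.0, 5.0)]
    radar_targets = [(1.5, -0.3), (5.27, 1.38), (2.61, 4.97)]
    print(estimate_planar_extrinsics(lidar_targets, radar_targets))
```

With noise-free correspondences the fit recovers the exact transform; with real measurements, more targets spread across the field of view make the estimate more robust.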

When to calibrate

During the ADAS development process, calibration comes into play at various stages. These two are indispensable:

- Bringing test vehicles into service

The step from prototype to serial production can be tedious. With our solid expertise in designing and building prototypes and validation systems, it becomes much easier. Use our all-in-one solution for easy, seamless calibration of the reference sensors and DUTs integrated into your test vehicles.

- Re-calibrating regularly and on demand

Sensors and validation systems need re-calibration from time to time, whether on a regular schedule or on demand. With our flexible services, we can support you at your premises anywhere in the world at short notice.

What’s next?

Calibration is not an end in itself. It’s a necessary step in achieving one or more of the following goals:

Enabling LiDAR ego-motion correction

A LiDAR system captures environmental data while rotating. Due to this rotation, points from different directions are captured at different points in time. Combined with the motion of both the ego vehicle and the observed objects, this leads to an effect similar to the rolling-shutter effect of cameras (for more information, see this explanation). With an accurate calibration between the LiDAR and the IMU, the ego-motion measured by the IMU can be used to minimize this effect, so that ground truth data from LiDAR sensors becomes more accurate.
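The following is a minimal planar sketch of ego-motion correction (often called "deskewing"), under stated assumptions: constant velocity and yaw rate during one sweep, a first-order motion model, and LiDAR and IMU axes that are aligned so only their translational offset (the lever arm) matters. It also shows why the LiDAR-to-IMU offset is needed: when the vehicle turns, the LiDAR moves at a different velocity than the IMU. All names and values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class LidarPoint:
    x: float  # metres, in the LiDAR frame at capture time
    y: float  # metres, in the LiDAR frame at capture time
    t: float  # capture time in seconds, relative to sweep start


def lidar_velocity(v_imu, yaw_rate, lever_arm):
    """Velocity of the LiDAR origin derived from the IMU measurement.

    v_imu: (vx, vy) in m/s, yaw_rate in rad/s, lever_arm: LiDAR position
    relative to the IMU (rx, ry) in metres. Assumes aligned sensor axes.
    """
    rx, ry = lever_arm
    # Rigid-body kinematics: v_lidar = v_imu + omega x r (omega along z)
    return v_imu[0] - yaw_rate * ry, v_imu[1] + yaw_rate * rx


def deskew(points, v_lidar, yaw_rate, t_ref):
    """Express all points in the LiDAR frame at time t_ref.

    First-order model: between t_ref and a point's capture time, the sensor
    rotates by yaw_rate * dt and translates by v_lidar * dt.
    """
    out = []
    for p in points:
        dt = p.t - t_ref
        c, s = math.cos(yaw_rate * dt), math.sin(yaw_rate * dt)
        # Rotate into the reference orientation, then add the sensor displacement
        x = c * p.x - s * p.y + v_lidar[0] * dt
        y = s * p.x + c * p.y + v_lidar[1] * dt
        out.append(LidarPoint(x, y, t_ref))
    return out


if __name__ == "__main__":
    v = lidar_velocity((20.0, 0.0), 0.2, (1.2, 0.0))
    sweep = [LidarPoint(10.0, 0.0, 0.0), LidarPoint(10.0, 0.0, 0.1)]
    print(deskew(sweep, v, 0.2, t_ref=0.1))
```

An error in the lever arm translates directly into an error in the LiDAR velocity during turns, which is why a precise LiDAR-to-IMU offset matters for this correction. Production pipelines typically interpolate full 3D poses from the IMU instead of assuming constant motion.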

Improving ground truth data generation via sensor fusion

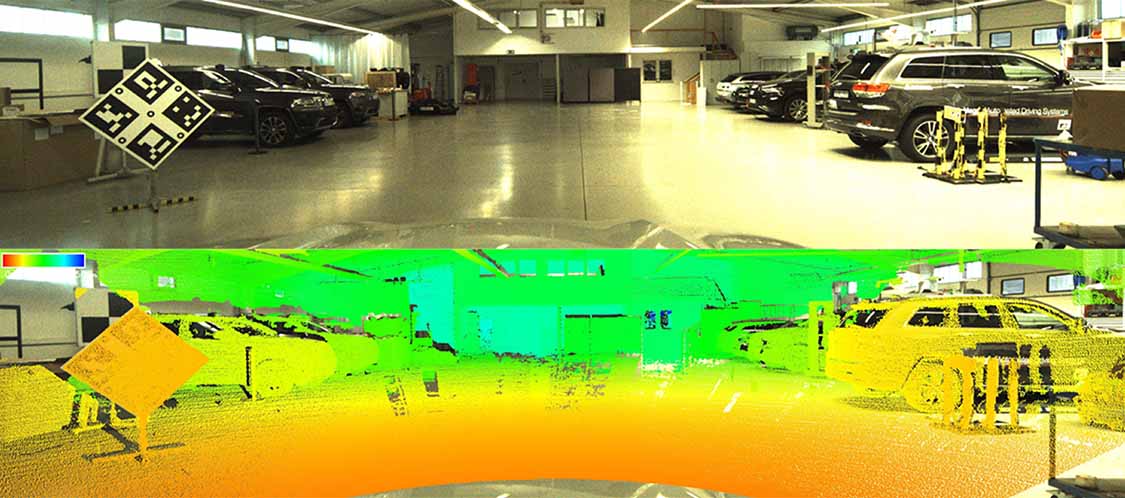

Generating GT by annotating LiDAR point cloud data can be very challenging. Because such data looks very abstract, fusing LiDAR and camera data can simplify this process. 3D point cloud data and 2D camera images are fused together to create 5D data. This more detailed data enables the extraction of more accurate GT objects. With this approach, Zoreza Global supports the trend toward future systems based on central ECU sensor fusion: "first fuse, then detect" instead of "first detect, then fuse".

The fusion of multiple sensors of different types requires highly accurate calibration of all relevant sensors.

Calibration between LiDAR and camera: 5D data generation through sensor fusion
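The core step of LiDAR-camera fusion is projecting each 3D LiDAR point into the camera image using the extrinsic calibration (rotation `R` and translation `t` from the LiDAR frame to the camera frame) and the camera intrinsics. Here is a minimal sketch, assuming a rectified (undistorted) image, the common camera convention with z pointing forward, and hypothetical calibration values; each projected point could then be enriched with the data of the pixel it lands on.

```python
def project_lidar_to_image(points, R, t, fx, fy, cx, cy, width, height):
    """Project LiDAR points (x, y, z) into pixel coordinates.

    R: 3x3 rotation (list of rows) and t: translation, LiDAR -> camera frame.
    Returns a list of (point, (u, v)) for points that land inside the image.
    """
    result = []
    for p in points:
        # Transform into the camera frame: p_cam = R * p + t
        xc, yc, zc = [sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]
        if zc <= 0.0:
            continue  # point lies behind the camera
        # Pinhole projection onto the image plane
        u = fx * xc / zc + cx
        v = fy * yc / zc + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            result.append((p, (u, v)))
    return result


if __name__ == "__main__":
    # Hypothetical axes: LiDAR x forward, y left, z up; camera x right, y down, z forward
    R = [[0.0, -1.0, 0.0], [0.0, 0.0, -1.0], [1.0, 0.0, 0.0]]
    t = (0.0, -0.2, -0.1)
    cloud = [(15.0, 1.0, 0.5), (8.0, -2.0, -0.3), (-4.0, 0.0, 0.0)]
    for point, pixel in project_lidar_to_image(cloud, R, t, 1000.0, 1000.0, 960.0, 540.0, 1920, 1080):
        print(point, "->", pixel)
```

Even a small error in `R` shifts distant points by many pixels, which is why the point cloud only lines up with the image when the calibration is highly accurate.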

Optimizing KPI accuracy

Comparing DUT results with GT objects requires a common coordinate system, usually the vehicle coordinate system (VCS). Transferring object positions into another coordinate system is error-prone, especially because small angular inaccuracies lead to large position offsets for distant objects.

Keep in mind that KPIs calculated on such error-prone data can be heavily distorted. A faulty calculation can mislead you in both directions: you may treat a perfectly functioning system as faulty, or a faulty system may go unnoticed.
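A quick worked example shows why small angular errors matter. The sketch below transforms the same detection into the VCS once with the correct mounting yaw and once with a yaw error, and reports the resulting position offset. The function names and the 3.8 m mounting position are hypothetical; at 100 m, a 1° yaw error already shifts the object by about 1.75 m, roughly half a typical highway lane.

```python
import math


def sensor_to_vcs(x, y, yaw_rad, mount_x, mount_y):
    """Transform a 2D detection from the sensor frame into the VCS.

    yaw_rad is the sensor's mounting yaw and (mount_x, mount_y) its position
    in the VCS, both taken from an extrinsic calibration.
    """
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return c * x - s * y + mount_x, s * x + c * y + mount_y


def position_error(distance_m, yaw_error_deg, mount_x=3.8):
    """Offset caused by a yaw calibration error for an object straight ahead."""
    true_x, true_y = sensor_to_vcs(distance_m, 0.0, 0.0, mount_x, 0.0)
    bad_x, bad_y = sensor_to_vcs(distance_m, 0.0, math.radians(yaw_error_deg), mount_x, 0.0)
    return math.hypot(bad_x - true_x, bad_y - true_y)


if __name__ == "__main__":
    for d in (10.0, 50.0, 100.0, 200.0):
        print(f"{d:5.0f} m -> {position_error(d, 1.0):.2f} m offset")
```

The offset grows linearly with distance, so an error that is negligible for nearby objects can flip the lane assignment of a vehicle far ahead, exactly the kind of mistake that distorts KPIs.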

How our calibration service works

The calibration process consists of three phases:

1. Preparation phase

During the preparation phase, which can take one or more days depending on your requirements, we define the scale and conditions of the project; check the requirements, sensor specifications and calibration methods; and prepare a sensor parser that connects the client toolchain to our calibration toolchain.

2. Calibration phase

This is a one-day endeavor. We pick up your test vehicle and measurement system, and our calibration experts perform the entire calibration process. Because there is no need for a dedicated calibration facility, we can offer the calibration services you need anywhere in the world. You don't have to be involved in the calibration process at all. Once calibration is complete, we hand back your vehicle and equipment.

3. Result feedback

As soon as all calibration data has been recorded, our experts process it and provide you with a calibration report containing the calibration matrices.
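Calibration matrices are commonly expressed as 4x4 homogeneous transformation matrices that combine rotation and translation. The exact report format is not described here, so the following is a hypothetical sketch: it builds such a matrix from a yaw angle and a translation, chains a LiDAR-to-IMU transform with an IMU-to-VCS transform, and applies the result to a point. Only rotation about the vertical axis is modeled to keep the example short.

```python
import math


def make_transform(yaw_rad, tx, ty, tz):
    """4x4 homogeneous transform: rotation about z by yaw, plus translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def matmul(a, b):
    """Multiply two 4x4 matrices (a applied after b when used on points)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def apply(T, p):
    """Apply a homogeneous transform to a 3D point (x, y, z)."""
    x, y, z = p
    return tuple(T[i][0] * x + T[i][1] * y + T[i][2] * z + T[i][3] for i in range(3))


if __name__ == "__main__":
    T_imu_lidar = make_transform(math.radians(0.5), 0.8, 0.0, 1.6)  # hypothetical values
    T_vcs_imu = make_transform(0.0, 1.2, 0.0, 0.3)                  # hypothetical values
    # Chaining: LiDAR -> IMU -> VCS
    T_vcs_lidar = matmul(T_vcs_imu, T_imu_lidar)
    print(apply(T_vcs_lidar, (25.0, -1.5, 0.2)))
```

Naming each matrix after its target and source frame (`T_vcs_lidar` maps LiDAR coordinates into the VCS) makes it easy to check that chained transforms line up.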

As you can see, the effort on your side is minimal. You need neither your own calibration algorithms nor a test team. You can rely on our longstanding experience and get your results within a few days.

Our portfolio

Zoreza Global offers four kinds of calibration services:

- High-precision measurement of the offset between LiDAR and inertial measurement unit (IMU)

Get the position of the LiDAR system relative to the IMU to within one millimeter, enabling high-precision ego-motion correction.

- Intrinsic camera calibration for distortion removal

Get the intrinsic parameters of your camera. Measurements are taken using a calibration pattern or our special-purpose calibration stand to determine and remove the lens distortion.

- Cross-sensor calibration for data fusion

Get the calibration between two sensors, such as LiDAR to camera, LiDAR to LiDAR, or radar to camera, to enable fusion across multiple sensors and sensor types.

- KPI calculation based on sensor-to-VCS calibration

Get the calibration of a sensor relative to the vehicle coordinate system to avoid misleading KPIs when measuring your DUT's performance. You can also validate your DUT's self-calibration by comparing it with our DUT-to-VCS calibration.
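One way to compare a DUT's self-calibration with a reference DUT-to-VCS calibration is to report the residual rotation angle and the translation offset between the two. Here is a minimal sketch, assuming both calibrations are given as a 3x3 rotation matrix plus a translation vector; the function names and tolerance values are hypothetical and not taken from any standard.

```python
import math


def rotation_angle_between(R_a, R_b):
    """Angle (radians) of the relative rotation R_a^T * R_b."""
    # trace(R_a^T R_b) equals the sum of element-wise products
    trace = sum(R_a[i][j] * R_b[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against rounding slightly outside [-1, 1]
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.acos(cos_angle)


def compare_calibrations(ref, dut, max_angle_deg=0.1, max_offset_m=0.01):
    """Compare (R, t) calibrations; returns (angle_deg, offset_m, within_tolerance)."""
    R_ref, t_ref = ref
    R_dut, t_dut = dut
    angle_deg = math.degrees(rotation_angle_between(R_ref, R_dut))
    offset_m = math.dist(t_ref, t_dut)
    ok = angle_deg <= max_angle_deg and offset_m <= max_offset_m
    return angle_deg, offset_m, ok


def yaw_matrix(yaw_deg):
    """Rotation matrix about the vertical axis, for building test poses."""
    c, s = math.cos(math.radians(yaw_deg)), math.sin(math.radians(yaw_deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


if __name__ == "__main__":
    reference = (yaw_matrix(0.0), (3.80, 0.00, 0.50))
    self_calibration = (yaw_matrix(0.3), (3.805, 0.00, 0.50))
    print(compare_calibrations(reference, self_calibration))
```

Reporting the rotation as a single angle keeps the comparison independent of how each system parameterizes its orientation (Euler angles, quaternions or matrices).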

Highly accurate sensor calibration: the LiDAR point cloud data matches the camera image perfectly.

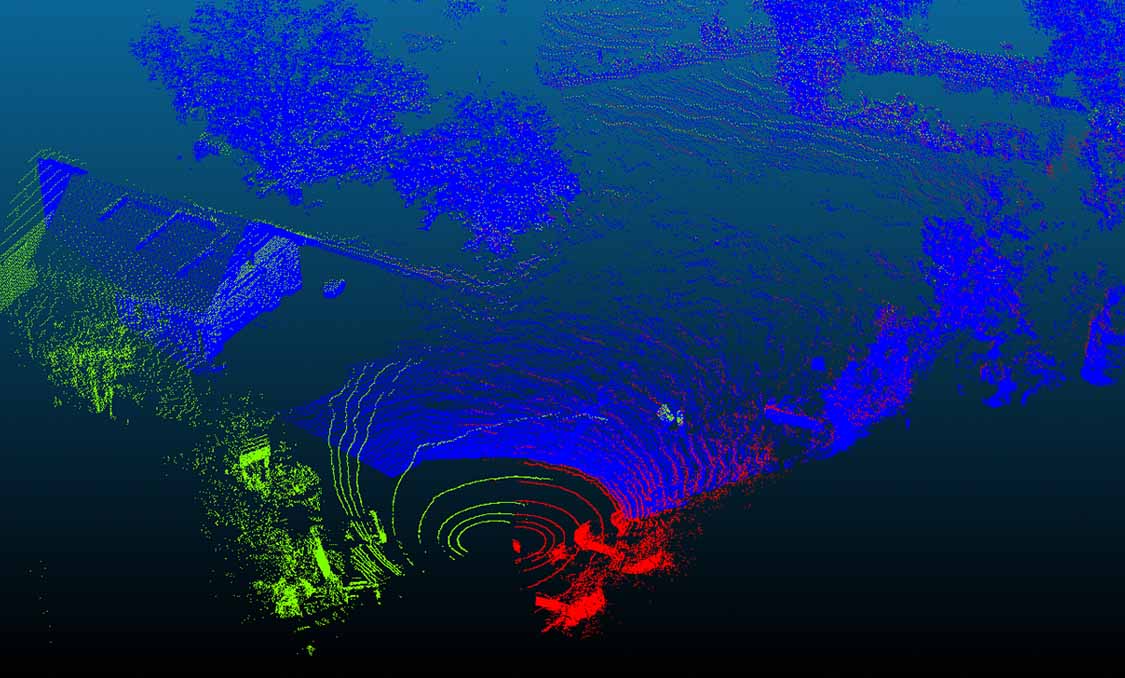

Multi-sensor calibration of LiDAR sensors enables a wider field of view and higher data density

Sensor calibration may not sound like a big topic, but it is an important one. With more and different kinds of sensors, complexity increases, and calibrating the entire sensor system holistically becomes a real challenge.

We have already proven our expertise in various customer and research projects. As part of the BMWK-funded project KI Delta Learning, which aimed to efficiently generate new training data for automotive AI algorithms, we were responsible for the complete calibration process of the comprehensive reference sensor setup. The project focused on evaluating multi-sensor fusion by optimizing sensor positions and time synchronization, and our calibration toolchain made it possible to provide reference data of exceptionally high quality.

As a global automotive software systems integrator with deep domain expertise, we can help you manage that complexity, enabling you to differentiate your brand. Contact us to discuss your needs.